How Netflix's rating system is hurting our documentaries

The Good, The Bad, and Nothing Else

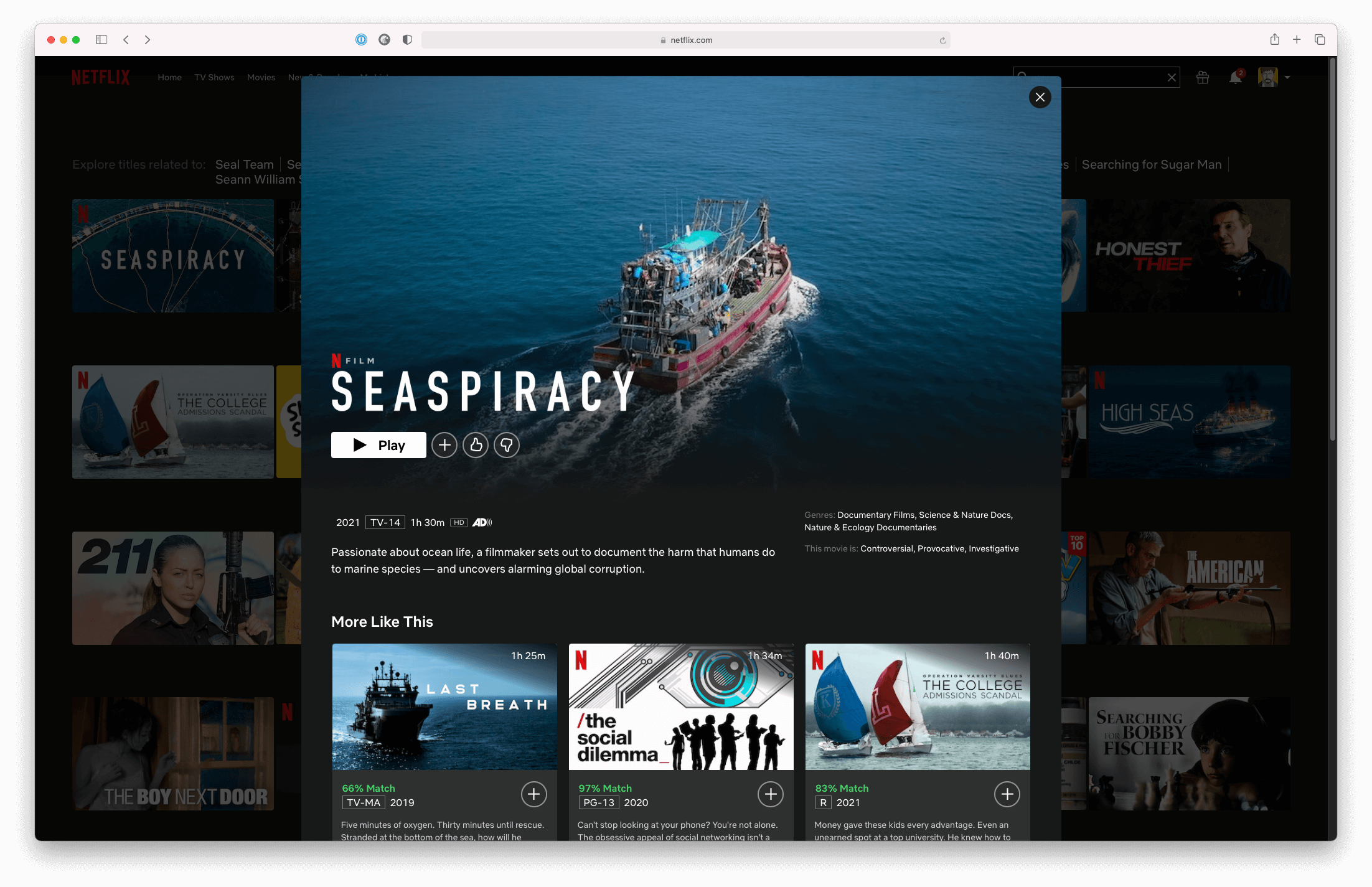

After watching Seaspiracy, Netflix's hotly debated documentary about the unsustainable practices of industrial fisheries, I felt my mixed feelings about the limitations of the platform’s thumbs-based rating system come vividly back to life.

I found Seaspiracy to be sensationalist, highly biased, and in some cases factually problematic. (Since I’m not here to explain these problems, I invite you to read what marine biologists had to say about it here and here.) Because I genuinely want to learn more about the environment, my dilemma boils down to two conflicting messages I want to send Netflix through my rating of the documentary:

- I DO want more of this subject

- I DON’T want more of this style

However, there’s no way I can do that with a simple binary system. And I’m not alone in disliking the thumbs-based rating for its lack of nuance. In fact, several publications criticized it as soon as Netflix replaced its 5-star ratings back in 2017.

Yet media complaints built on Twitter and Reddit posts carry little weight against a company as data-driven as Netflix, and mostly leave journalists with egg on their faces. In an interview with Variety, Todd Yellin, Netflix's VP of Product, said the thumbs-based rating got 200% more engagement than the star system in user tests.

Unfortunately, this pursuit of lower friction in every possible digital interaction, which I've written about before, ends up limiting user control. This is exemplified in the concluding paragraphs of the Variety article:

Yellin said that the company completely relied on its users rating titles with stars when it began personalization some years ago. At one point, it had over 10 billion five-star ratings, and more than 50% of all members had rated more than 50 titles.

However, over time, Netflix realized that explicit star ratings were less relevant than other signals. Users would rate documentaries with 5 stars, and silly movies with just 3 stars, but still watch silly movies more often than those high-rated documentaries.

“We made ratings less important because the implicit signal of your behaviour is more important,” Yellin said.

Essentially, Netflix is giving up users' ability to express something more complex in favour of opaque algorithmic recommendations. Explicit user input is dumbed down to two options and buried beneath a pile of other data, with no transparency about how any of it is interpreted. Netflix's website does describe how it recommends titles, but it focuses on what is collected rather than how it’s used:

We estimate the likelihood that you will watch a particular title in our catalog based on a number of factors including: your interactions with our service (such as your viewing history and how you rated other titles), other members with similar tastes and preferences on our service, and information about the titles, such as their genre, categories, actors, release year, etc.

In addition to knowing what you have watched on Netflix, to best personalize the recommendations we also look at things like: the time of day you watch, the devices you are watching Netflix on, and how long you watch.

All of this brings me back to my frustration with Seaspiracy and my growing dissatisfaction with Netflix ratings. Because Netflix prioritizes the quantity of interactions over their quality, we’re now stuck with a limited form of expression.

This would be merely irritating if it only hindered my ability to find the right movie on a lazy evening. But it raises a plethora of problems for educational content, particularly now that Netflix is a producer and not just a curator. Our opaquely interpreted data is being translated into major financial investments that determine what information people consume about global crises like climate change.

When rating Seaspiracy, I have two separate opinions, one about the content and one about the style, and no way to express them. Instead, the thumbs system leaves me with two vague options:

- Thumbs up: I like this

- Thumbs down: Not for me

OK... but what do I like about it? What about it is not for me?

Technologists will tell me to sit back and let the machines take care of it. After all, the algorithms are complex and dreamed up by some of the best engineers in the world. Arguably, I could watch a bunch of other documentaries, give them all thumb ratings, and eventually the accumulated data would allow the machine to deduce more nuance about my opinion. But given how poorly machines can interpret even the largest datasets, I would rather avoid that scenario. It sounds like a frustrating user experience more than anything else. To quote Dave Smith, a writer for Business Insider:

It feels almost antithetical to deprive people of choice in an age where people crave more nuance and information, where even Facebook's "Like" button is now supplemented by other emotions.

Though he said this in 2017, it feels all the more relevant today. What’s more, data from a 2018 study showed that Facebook's new reactions increased engagement by 433%! And while these reactions have their own limitations, a reaction-based rating system, combined with more transparency about how other user actions are interpreted, could lead the way to a new kind of relationship between users and private companies, one that prioritizes digital well-being.

Moreover, Netflix could tailor the reaction options to the type of content. Documentaries could be rated on whether they’re exciting, accessible, boring or questionable, while movie ratings could cover story, entertainment value, pacing or acting.

As our technology improves and new generations grow increasingly comfortable interacting with it, the excuses for treating users as if they don't know what's best for them will become increasingly unacceptable. It's going to take innovation and bold risks to introduce new systems that help users express what they truly want from our complex internet services, but we’ll be all the better for it in the long run.