Personalization didn’t have to suck

In an attempt to peel away from shady data practices, I’ve started using more private browsers, opting out of data sharing on websites, and logging out of my Google account and social media to minimize cross-site tracking. For the most part, the only immediately noticeable result is that my ads are less relevant, though I still see just as many of them. However, a side effect I didn’t expect to miss as much is the personalization of the services themselves.

Now, my YouTube recommendations have become a lot worse, searching is more cumbersome without some amount of recent history, and my email inboxes are stupider.

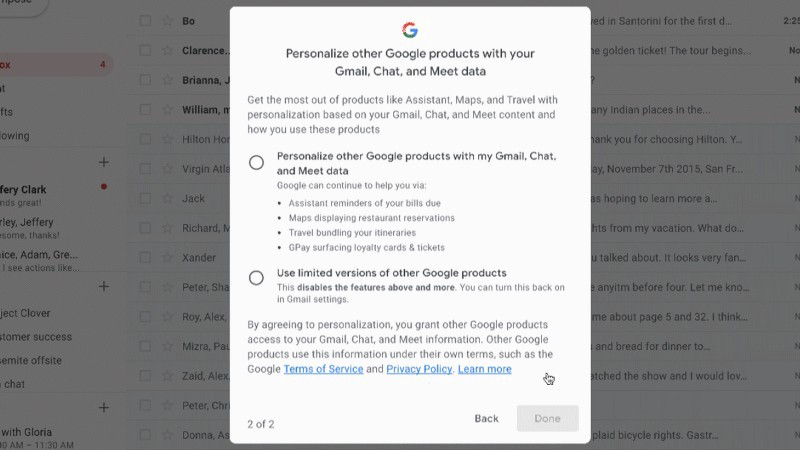

If you’ve logged into Gmail recently, you’ve probably seen the pop-up above. Google effectively gave its users an ultimatum: Give us your data for ads or suffer the consequences of dumb tech.

What’s interesting about this is the way they frame it. Google claims the data they need to make Gmail smart is necessarily related to how your ads are personalized. Of course, this is 100% false. Google could change their business model and charge users for these smart features, sans ads. It would be a difficult undertaking, no doubt, but it’s certainly doable.

Unfortunately, Google, like most of the major tech companies, isn’t genuinely interested in personalizing your online experience. Personalization was just a fantastic way to gain insight into our cognition. When companies make us feel understood and time things correctly, we humans become much more likely to buy.

The problem lies not in the data collection itself, but in the way companies leverage that information to abuse human cognition in order to maximize profits. Yes, I am using the word abuse. If this is rubbing you the wrong way, I can direct you to many books, and even a documentary, dedicated to this topic.

As a society, we’ve found some wonderful ways to dance around this by using language that creates a distinct separation between the algorithms and two groups of people:

- Their creators, who we continually fail to acknowledge as responsible when algorithms fail

- Their users, who we constantly try to blame for being addicted to their devices, as if the algorithms have always existed and are only now a problem

It’s a shame we had to end up here. Quite a few of the personalization features have been incredibly useful, but I just can’t handle the trade-offs anymore. It seems like I’m not alone. At the end of this post is an article that makes several interesting points about the potential end of online advertising. I strongly suggest that anyone interested in this topic give it a read.

In the meantime, I wanted to make one thing clear about my data exodus to the people building these algorithms: It’s not personal. It’s ads.

Further reading

- The Ad-Based Internet Is About to Collapse. What Comes Next? by Paris Marx

- Weapons of Math Destruction by Cathy O’Neil

- The Hype Machine by Sinan Aral

- Hello World by Hannah Fry